Difference between revisions of "Slicer-3-6-FAQ"

m (Text replacement - "https?:\/\/www.slicer.org\/slicerWiki\/index.php\/([^ ]+) " to "https://www.slicer.org/wiki/$1") |

|||

| (4 intermediate revisions by 2 users not shown) | |||

| Line 59: | Line 59: | ||

*'''Problem:''' My image appears upside down / flipped / facing the wrong way / I have incorrect/missing axis orientation | *'''Problem:''' My image appears upside down / flipped / facing the wrong way / I have incorrect/missing axis orientation | ||

*'''Explanation:''' Slicer presents and interacts with images in ''physical'' space, which differs from the way the image is stored by a separate ''transform'' that defines how large the voxels are and how the image is oriented in space, e.g. which side is left or right. This information is stored in the image header, and different image file formats have different ways of storing this information. If Slicer supports the image format, it should read the information in the header and display the image correctly. If the image appears upside down or with distorted aspect ratio etc, then the image header information is either missing or incorrect. | *'''Explanation:''' Slicer presents and interacts with images in ''physical'' space, which differs from the way the image is stored by a separate ''transform'' that defines how large the voxels are and how the image is oriented in space, e.g. which side is left or right. This information is stored in the image header, and different image file formats have different ways of storing this information. If Slicer supports the image format, it should read the information in the header and display the image correctly. If the image appears upside down or with distorted aspect ratio etc, then the image header information is either missing or incorrect. | ||

| − | *'''Fix:''' See [ | + | *'''Fix:''' See [[Slicer-3-6-FAQ#How_do_I_fix_incorrect_axis_directions.3F_Can_I_flip_an_image_.28left.2Fright.2C_anterior.2Fposterior_etc.29_.3F|FAQ above]] for a way to flip axes inside Slicer. You can also correct the voxel dimensions and the image origin in the Info tab of the [[Modules:Volumes-Documentation-3.6|Volumes module]], and you can reorient images via the [[Modules:Transforms-Documentation-3.6|Transforms module]]. Reorientation however will work only if the incorrect orientation involves rotation or translation. |

*To fix an axis orientation directly in the header info of an image file: | *To fix an axis orientation directly in the header info of an image file: | ||

:1. load the image into slicer (Load Volume, Add Data,Load Scene..) | :1. load the image into slicer (Load Volume, Add Data,Load Scene..) | ||

| Line 75: | Line 75: | ||

*'''Problem:''' My image appears distorted / stretched / with incorrect aspect ratio | *'''Problem:''' My image appears distorted / stretched / with incorrect aspect ratio | ||

*'''Explanation:''' Slicer presents and interacts with images in ''physical'' space, which differs from the way the image is stored by a set of separate information that represents the physical "voxel size" and the direction/spatial orientation of the axes. If the voxel dimensions are incorrect or missing, the image will be displayed in a distorted fashion. This information is stored in the image header. If the information is missing, a default of isotropic 1 x 1 x 1 mm size is assumed for the voxel. | *'''Explanation:''' Slicer presents and interacts with images in ''physical'' space, which differs from the way the image is stored by a set of separate information that represents the physical "voxel size" and the direction/spatial orientation of the axes. If the voxel dimensions are incorrect or missing, the image will be displayed in a distorted fashion. This information is stored in the image header. If the information is missing, a default of isotropic 1 x 1 x 1 mm size is assumed for the voxel. | ||

| − | *'''Fix:''' You can correct the voxel dimensions and the image origin in the Info tab of the [ | + | *'''Fix:''' You can correct the voxel dimensions and the image origin in the Info tab of the [[Modules:Volumes-Documentation-3.6|Volumes module]]. If you know the correct voxel size, enter it in the fields provided and hit RETURN after each entry. You should see the display update immediately. Ideally you should try to maintain the original image header information from the point of acquisition. Sometimes this information is lost in format conversion. Try an alternative converter or image format if you know that the voxel size is correctly stored in the original image. Alternatively, you can try to edit the information in the image header, e.g. save the volume in NRRD (.nhdr) format and open the ".nhdr" file with a text editor. |

== I don't understand your coordinate system. What do the coordinate labels R,A,S and (negative numbers) mean? == | == I don't understand your coordinate system. What do the coordinate labels R,A,S and (negative numbers) mean? == | ||

*It's very important to realize that Slicer displays all images in ''physical'' space, i.e. in mm. This requires orientation and size information that is stored in the image header. How that header info is set and read from the header will determine how the image appears in Slicer. RAS is the abbreviation for ''right'', ''anterior'', ''superior''; indicating in order the relation of the physical axis directions to how the image data is stored. | *It's very important to realize that Slicer displays all images in ''physical'' space, i.e. in mm. This requires orientation and size information that is stored in the image header. How that header info is set and read from the header will determine how the image appears in Slicer. RAS is the abbreviation for ''right'', ''anterior'', ''superior''; indicating in order the relation of the physical axis directions to how the image data is stored. | ||

| − | *For a detailed description on coordinate systems [ | + | *For a detailed description on coordinate systems [[Coordinate_systems|see here]]. |
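As a rough illustration of how to read RAS coordinates and their signs, the following Python sketch turns a coordinate triple into words. The function name `describe_ras` is hypothetical and not part of Slicer; it only assumes the convention stated above, namely that positive values point toward right, anterior and superior, so negative values point toward left, posterior and inferior.

```python
def describe_ras(r, a, s):
    """Return a readable description of a point given in RAS millimetres."""
    def axis(value, positive, negative):
        # A negative value points in the direction opposite to the axis label.
        name = positive if value >= 0 else negative
        return f"{abs(value):g} mm {name}"

    return ", ".join([
        axis(r, "right", "left"),
        axis(a, "anterior", "posterior"),
        axis(s, "superior", "inferior"),
    ])


print(describe_ras(-10, 20, -5.5))
# prints: 10 mm left, 20 mm anterior, 5.5 mm inferior
```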

== My image is very large, how do I downsample to a smaller size? == | == My image is very large, how do I downsample to a smaller size? == | ||

| Line 94: | Line 94: | ||

:9. Click Apply | :9. Click Apply | ||

'''Resampling in place to match another image in size''': | '''Resampling in place to match another image in size''': | ||

| − | :1. Go to the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6 '''ResampleScalarVectorDWIVolume''']] module | + | :1. Go to the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6|'''ResampleScalarVectorDWIVolume''']] module |

:2. ''Input Volume'': Select the image you wish to resample | :2. ''Input Volume'': Select the image you wish to resample | ||

:3. ''Reference Volume'': Select the reference image whose size/dimensions you want to match to. | :3. ''Reference Volume'': Select the reference image whose size/dimensions you want to match to. | ||

| Line 101: | Line 101: | ||

:6. Click Apply. Note that if the input and reference volumes do not overlap in physical space, i.e. are not at least roughly co-registered, the resampled result may contain only part of the input image, or none of it. This is because the program resamples in the space defined by the reference image and fills in zeros wherever there is no input data. If you get an empty or clipped result, that is most likely the cause. In that case, try to re-center the two volumes before resampling. | :6. Click Apply. Note that if the input and reference volumes do not overlap in physical space, i.e. are not at least roughly co-registered, the resampled result may contain only part of the input image, or none of it. This is because the program resamples in the space defined by the reference image and fills in zeros wherever there is no input data. If you get an empty or clipped result, that is most likely the cause. In that case, try to re-center the two volumes before resampling. | ||
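The overlap condition in step 6 can be checked by hand before resampling. The sketch below is a simplified illustration, not Slicer code: it assumes axis-aligned volumes (no rotation in the header), positive spacing, and treats the origin as the low corner of the volume. The helper names `physical_bounds` and `volumes_overlap` and the example numbers are made up for the illustration.

```python
def physical_bounds(origin, spacing, dims):
    """Return (low, high) per axis in mm for an axis-aligned volume."""
    return [(o, o + sp * n) for o, sp, n in zip(origin, spacing, dims)]


def volumes_overlap(bounds_a, bounds_b):
    """True only if the two boxes share some extent along every axis."""
    return all(lo_a < hi_b and lo_b < hi_a
               for (lo_a, hi_a), (lo_b, hi_b) in zip(bounds_a, bounds_b))


# Hypothetical header values: same size and spacing, different origins.
reference = physical_bounds((-128.0, -128.0, -60.0), (1.0, 1.0, 1.0), (256, 256, 120))
shifted = physical_bounds((300.0, -128.0, -60.0), (1.0, 1.0, 1.0), (256, 256, 120))

print(volumes_overlap(reference, reference))  # identical space
print(volumes_overlap(reference, shifted))    # origin moved far along the first axis
```

If the second check reports no overlap, resampling the shifted volume into the reference space would give an all-zero result, which is the situation the note in step 6 describes.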

'''Resampling in place by specifying new dimensions''': | '''Resampling in place by specifying new dimensions''': | ||

| − | :1. Go to the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6 '''ResampleScalarVectorDWIVolume''']] module | + | :1. Go to the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6| '''ResampleScalarVectorDWIVolume''']] module |

:2. ''Input Volume'': Select the image you wish to resample | :2. ''Input Volume'': Select the image you wish to resample | ||

:3. ''Reference Volume'': leave at "none" | :3. ''Reference Volume'': leave at "none" | ||

| Line 108: | Line 108: | ||

:6. Click Apply. Note that if the input and reference volume do not overlap in physical space, i.e. are roughly co-registered, the resampled result may not contain any or all of the input image. This is | :6. Click Apply. Note that if the input and reference volume do not overlap in physical space, i.e. are roughly co-registered, the resampled result may not contain any or all of the input image. This is | ||

:6. ''Output Parameters'': here you specify the new voxel size / spacing '''and''' dimensions. Note that you need to set both. If only the voxel size is specified, the image is resampled but retains its original dimensions (i.e. empty/zero space). If only the dimensions are specified the image will be resampled starting at the origin and cropped but not resized. | :6. ''Output Parameters'': here you specify the new voxel size / spacing '''and''' dimensions. Note that you need to set both. If only the voxel size is specified, the image is resampled but retains its original dimensions (i.e. empty/zero space). If only the dimensions are specified the image will be resampled starting at the origin and cropped but not resized. | ||

| − | ::* | + | ::*new voxel size: calculate the new voxel size and enter it in the ''Spacing'' field, as described in '''Resampling in place''' above (see step #5) |

| − | ::* | + | ::*new image dimensions: enter new dimensions under ''Size''. To prevent clipping, the output field of view (FOV = voxel size * image dimensions) should match that of the input |

:7. leave rest at default and click ''Apply'' | :7. leave rest at default and click ''Apply'' | ||
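The relation FOV = voxel size * image dimensions from step 6 can be used to work out matching ''Size'' values for a chosen ''Spacing''. The following Python sketch is only an arithmetic aid under that relation; the function name `dimensions_for_spacing` and the example values are hypothetical.

```python
def dimensions_for_spacing(old_dims, old_spacing, new_spacing):
    """Compute dimensions that keep the field of view (spacing * dims) unchanged."""
    new_dims = []
    for n, old_sp, new_sp in zip(old_dims, old_spacing, new_spacing):
        fov = n * old_sp                    # field of view along this axis, in mm
        new_dims.append(round(fov / new_sp))
    return tuple(new_dims)


# Example: halve the resolution of a 512 x 512 x 120 volume.
print(dimensions_for_spacing((512, 512, 120), (0.5, 0.5, 1.0), (1.0, 1.0, 2.0)))
# prints: (256, 256, 60)
```

When the FOV is not an exact multiple of the new spacing, rounding changes the FOV by less than one voxel; round up by hand if even that amount of clipping matters.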

| Line 116: | Line 116: | ||

*'''Explanation:''' Slicer chooses the field of view (FOV) for the display based on the image selected for the background. The FOV is therefore centered on the location defined by that image's origin. If two images have origins that differ significantly, they cannot be viewed well simultaneously. | *'''Explanation:''' Slicer chooses the field of view (FOV) for the display based on the image selected for the background. The FOV is therefore centered on the location defined by that image's origin. If two images have origins that differ significantly, they cannot be viewed well simultaneously. | ||

*'''Fix:''' recenter one or both images as follows: | *'''Fix:''' recenter one or both images as follows: | ||

| − | :1. Go to the [ | + | :1. Go to the [[Modules:Volumes-Documentation-3.6|Volumes module]], |

:2. Select the image to recenter from the ''Active Volume'' menu | :2. Select the image to recenter from the ''Active Volume'' menu | ||

:3. Select the ''Info'' tab. | :3. Select the ''Info'' tab. | ||

| Line 132: | Line 132: | ||

== How do I initialize/align images with very different orientations and no overlap? == | == How do I initialize/align images with very different orientations and no overlap? == | ||

I would like to register two datasets, but the centers of the two images are so different that they don't overlap at all. Is there a way to pre-register them automatically or manually to create an initial starting transformation?<br> | I would like to register two datasets, but the centers of the two images are so different that they don't overlap at all. Is there a way to pre-register them automatically or manually to create an initial starting transformation?<br> | ||

| − | *'''Automatic Initialization:''' Most registration tools have initializers that should take care of the initial alignment in the scenario you describe. If your images do not overlap at all but their extents are similar, the centered initializer should work. If the structures shown in the images are similar, the moments initializer might help. Both of these options are available in [ | + | *'''Automatic Initialization:''' Most registration tools have initializers that should take care of the initial alignment in the scenario you describe. If your images do not overlap at all but their extents are similar, the centered initializer should work. If the structures shown in the images are similar, the moments initializer might help. Both of these options are available in [[Modules:BRAINSFit|BRAINSFit]] (GeometryCenterAlign and MomentsAlign, respectively). You can run BRAINSFit with just the initializer to see what kind of transformation it produces. |

| − | *'''Manual Initialization:''' Use the ''Transforms'' module to create a manual initialization. Details in the [ | + | *'''Manual Initialization:''' Use the ''Transforms'' module to create a manual initialization. Details in the [[Slicer3.6:Training| manual registration tutorial]] and also in the FAQ below. |

== Can I manually adjust or correct a registration? == | == Can I manually adjust or correct a registration? == | ||

*'''Problem:''' obtained registration is insufficient | *'''Problem:''' obtained registration is insufficient | ||

*'''Explanation:''' The automated registration algorithms (except for fiducial and surface registration) in Slicer operate on image intensity and try to move images so that similar image content is aligned. This is influenced by many factors such as image contrast, resolution, voxel anisotropy, artifacts such as motion or intensity inhomogeneity, pathology etc, the initial misalignment and the parameters selected for the registration. | *'''Explanation:''' The automated registration algorithms (except for fiducial and surface registration) in Slicer operate on image intensity and try to move images so that similar image content is aligned. This is influenced by many factors such as image contrast, resolution, voxel anisotropy, artifacts such as motion or intensity inhomogeneity, pathology etc, the initial misalignment and the parameters selected for the registration. | ||

| − | *'''Fix:''' You can adjust/correct an obtained registration manually, within limits, as outlined below. Your first step, however, should be to try to obtain a better automated registration by changing some of the inputs and/or parameters and then re-running. There is also a dedicated [ | + | *'''Fix:''' You can adjust/correct an obtained registration manually, within limits, as outlined below. Your first step, however, should be to try to obtain a better automated registration by changing some of the inputs and/or parameters and then re-running. There is also a dedicated [[Slicer_3.6:Training|Manual Registration Tutorial]]. |

| − | **'''Manual Adjustment''': If the transform is linear, i.e. a rigid or affine transform, you can access the rigid components (translation and rotation) of that transform via the [ | + | **'''Manual Adjustment''': If the transform is linear, i.e. a rigid or affine transform, you can access the rigid components (translation and rotation) of that transform via the [[Modules:Transforms-Documentation-3.6|Transforms module]]. |

***#In the ''Data'' module, drag the image volume inside the registration transform node | ***#In the ''Data'' module, drag the image volume inside the registration transform node | ||

***#Select the views so that the volume is displayed in the slice views | ***#Select the views so that the volume is displayed in the slice views | ||

| − | ***#Go to the [ | + | ***#Go to the [[Modules:Transforms-Documentation-3.6|Transforms module]] and adjust the translation and rotation sliders to adjust the current position. To get a finer degree of control, enter smaller numbers for the translation limits and enter rotation angles numerically in increments of a few degrees at a time |

== What's the difference between the various registration methods listed in Slicer? == | == What's the difference between the various registration methods listed in Slicer? == | ||

| − | Most of the registration modules use the same underlying ITK registration algorithm for cost function and optimization, but differ in implementation on parameter selection, initialization and the type of image toward which they have been tailored. To help choose the best one for you '''based on the method or available options''', an [ | + | Most of the registration modules use the same underlying ITK registration algorithm for cost function and optimization, but differ in implementation on parameter selection, initialization and the type of image toward which they have been tailored. To help choose the best one for you '''based on the method or available options''', an [[Slicer3:Registration#Registration_in_3D_Slicer|overview of all registration methods]] incl. [[Registration:Categories|a '''comparison matrix''' can be found here]]. <br> |

To help choose '''based on a particular image type and content''', you will find many example cases incl. step-by-step instructions and discussions on the particular registration challenges in the [http://na-mic.org/Wiki/index.php/Projects:RegistrationDocumentation:UseCaseInventory '''Slicer Registration Library''']. The library is organized in several different ways, e.g. consult this [http://na-mic.org/Wiki/index.php/Projects:RegistrationDocumentation:RegLibTable '''sortable table''' with all cases and the method used].<br> | To help choose '''based on a particular image type and content''', you will find many example cases incl. step-by-step instructions and discussions on the particular registration challenges in the [http://na-mic.org/Wiki/index.php/Projects:RegistrationDocumentation:UseCaseInventory '''Slicer Registration Library''']. The library is organized in several different ways, e.g. consult this [http://na-mic.org/Wiki/index.php/Projects:RegistrationDocumentation:RegLibTable '''sortable table''' with all cases and the method used].<br> | ||

There is also a brief overview within Slicer that helps distinguish: Select the ''Registration Welcome'' option at the top of the Modules/Registration menu. | There is also a brief overview within Slicer that helps distinguish: Select the ''Registration Welcome'' option at the top of the Modules/Registration menu. | ||

| Line 154: | Line 154: | ||

*'''Note:''' masking within the registration is different from feeding a masked/stripped image as input, where areas of no interest have been erased. Such masking can still produce valuable results and is a viable option if the module in question does not provide a direct masking option. But direct masking by erasing portions of the image content can produce sharp edges that registration methods can lock onto. If the edge becomes dominant then the resulting registration will be only as good as the accuracy of the masking. That problem does not occur when using masking option within the module. | *'''Note:''' masking within the registration is different from feeding a masked/stripped image as input, where areas of no interest have been erased. Such masking can still produce valuable results and is a viable option if the module in question does not provide a direct masking option. But direct masking by erasing portions of the image content can produce sharp edges that registration methods can lock onto. If the edge becomes dominant then the resulting registration will be only as good as the accuracy of the masking. That problem does not occur when using masking option within the module. | ||

*The following modules currently (v.3.6.1) provide masking: | *The following modules currently (v.3.6.1) provide masking: | ||

| − | **[ | + | **[[Modules:BRAINSFit|'''BRAINSFit''']] |

***found under: ''Masking Options'' tab | ***found under: ''Masking Options'' tab | ||

***requires mask for both fixed and moving image | ***requires mask for both fixed and moving image | ||

***has option to automatically generate masks | ***has option to automatically generate masks | ||

***some initialization modes (''CenterOfHeadAlign'') will not work in conjunction with masks | ***some initialization modes (''CenterOfHeadAlign'') will not work in conjunction with masks | ||

| − | **[ | + | **[[Modules:RegisterImages-Documentation-3.6|'''Expert Automated Registration''']] |

***found under: ''Advanced Registration Parameters'' tab | ***found under: ''Advanced Registration Parameters'' tab | ||

***requires mask for fixed image only | ***requires mask for fixed image only | ||

| − | **[ | + | **[[Modules:RegisterImagesMultiRes-Documentation-3.6|'''Robust Multiresolution Affine''']] |

***found under: ''Optional'' tab | ***found under: ''Optional'' tab | ||

***requires mask for fixed image only | ***requires mask for fixed image only | ||

***provides option to define a mask as a box ROI | ***provides option to define a mask as a box ROI | ||

| − | **[ | + | **[[Modules:BRAINSDemonWarp|'''BRAINSDemonWarp''']] |

***found under: ''Mask Options'' tab | ***found under: ''Mask Options'' tab | ||

***requires mask for both fixed and moving image | ***requires mask for both fixed and moving image | ||

| Line 185: | Line 185: | ||

*'''Explanation:''' The automated registration algorithms (except for fiducial and surface registration) in Slicer operate on image intensity and try to move images so that similar image content is aligned. This is influenced by many factors such as image contrast, resolution, voxel anisotropy, artifacts such as motion or intensity inhomogeneity, pathology etc, the initial misalignment and the parameters selected for the registration. | *'''Explanation:''' The automated registration algorithms (except for fiducial and surface registration) in Slicer operate on image intensity and try to move images so that similar image content is aligned. This is influenced by many factors such as image contrast, resolution, voxel anisotropy, artifacts such as motion or intensity inhomogeneity, pathology etc, the initial misalignment and the parameters selected for the registration. | ||

*'''Fix:''' Your first step should be to re-run the automated registration while changing the initial position, the initialization method, the parameters, or the method/module used. To determine a good second approach, it helps most to know why the first one likely failed. Below is a list of possible reasons and remedies: | *'''Fix:''' Your first step should be to re-run the automated registration while changing the initial position, the initialization method, the parameters, or the method/module used. To determine a good second approach, it helps most to know why the first one likely failed. Below is a list of possible reasons and remedies: | ||

| − | **'''too much initial misalignment:''' particularly rotation can be difficult for automated registration to capture. If the two images have strong rotational misalignment, consider A) one of the initialization options (e.g. [ | + | **'''too much initial misalignment:''' particularly rotation can be difficult for automated registration to capture. If the two images have strong rotational misalignment, consider A) one of the initialization options (e.g. [[Modules:BRAINSFit|BRAINSfit]] or [[Modules:RegisterImages-Documentation-3.6|Expert Automated]]), B) a manual initial alignment using the [[Modules:Transforms-Documentation-3.6|Transforms module]] and then use this as initialization input |

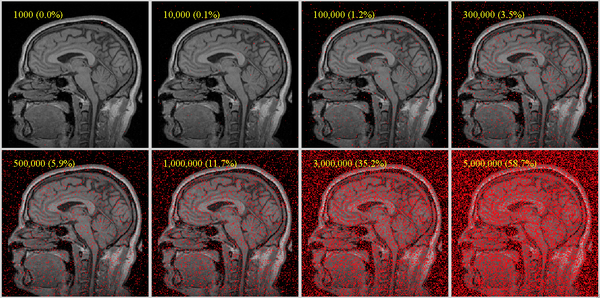

**'''insufficient detail:''' consider increasing the number of sample points used for the registration, depending on time/speed constraints, increase to 5-10% of image size. | **'''insufficient detail:''' consider increasing the number of sample points used for the registration, depending on time/speed constraints, increase to 5-10% of image size. | ||

**'''insufficient contrast:''' consider adjusting the ''Histogram Bins'' (where avail.) to tune the algorithm to weigh small intensity variations more or less heavily | **'''insufficient contrast:''' consider adjusting the ''Histogram Bins'' (where avail.) to tune the algorithm to weigh small intensity variations more or less heavily | ||

**'''strong anisotropy:''' if one or both of the images have strong voxel anisotropy of ratios 5 or more, rotational alignment may become increasingly difficult for an automated method. Consider increasing the sample points and reducing the ''Histogram Bins''. In extreme cases you may need to switch to a manual or fiducial-based approach | **'''strong anisotropy:''' if one or both of the images have strong voxel anisotropy of ratios 5 or more, rotational alignment may become increasingly difficult for an automated method. Consider increasing the sample points and reducing the ''Histogram Bins''. In extreme cases you may need to switch to a manual or fiducial-based approach | ||

| − | **'''distracting image content:''' pathology, strong edges, clipped FOV with image content at the border of the image can easily dominate the cost function driving the registration algorithm. '''Masking''' is a powerful remedy for this problem: create a mask (binary labelmap/segmentation) that excludes the distracting parts and includes only those areas of the image where matching content exists. This requires one of the modules that supports masking input, such as: [ | + | **'''distracting image content:''' pathology, strong edges, clipped FOV with image content at the border of the image can easily dominate the cost function driving the registration algorithm. '''Masking''' is a powerful remedy for this problem: create a mask (binary labelmap/segmentation) that excludes the distracting parts and includes only those areas of the image where matching content exists. This requires one of the modules that supports masking input, such as: [[Modules:BRAINSFit|BRAINSFit]], [[Modules:RegisterImages-Documentation-3.6|ExpertAutomated]], [[Modules:RegisterImagesMultiRes-Documentation-3.6|Multi Resolution]]. Next best thing to use with modules that do not support masking is to mask the image manually and create a temporary masked image where the excluded content is set to 0 intensity; the ''Mask Volume'' module performs this task |

| − | **'''too many/too few DOF''': the degrees of freedom (DOF) determine how much motion is allowed for the image to be registered. Too few DOF results in suboptimal alignment; too many DOF can result in overfitting or the algorithm getting stuck in local extrema, or a bad fit with some local detail matched but the rest misaligned. Consider a stepwise approach where the DOF are gradually increased. [ | + | **'''too many/too few DOF''': the degrees of freedom (DOF) determine how much motion is allowed for the image to be registered. Too few DOF results in suboptimal alignment; too many DOF can result in overfitting or the algorithm getting stuck in local extrema, or a bad fit with some local detail matched but the rest misaligned. Consider a stepwise approach where the DOF are gradually increased. [[Modules:BRAINSFit|BRAINSfit]] and [[Modules:RegisterImages-Documentation-3.6|Expert Automated]] provide such pipelines; or you can nest the transforms manually. A multi-resolution approach can also greatly benefit difficult registration challenges: this scheme runs multiple registrations at increasing amounts of image detail. The [[Modules:RegisterImagesMultiRes-Documentation-3.6|Robust Multiresolution module]] performs this task. |

| − | **'''inappropriate algorithm:''' there are many different registration methods available in Slicer. Have a look at the [ | + | **'''inappropriate algorithm:''' there are many different registration methods available in Slicer. Have a look at the [[Slicer3:Registration|'''Registration Method Overview''']] and consider one of the alternatives. Also review the [http://na-mic.org/Wiki/index.php/Projects:RegistrationDocumentation:RegLibTable '''sortable table''' in the Registration Case Library] to see which methods were successfully used on cases matching your own. |

**you can adjust/correct an obtained registration manually, within limits, as outlined [[Slicer3:FAQ#Can I manually adjust or correct a registration?|'''in this FAQ''']]. | **you can adjust/correct an obtained registration manually, within limits, as outlined [[Slicer3:FAQ#Can I manually adjust or correct a registration?|'''in this FAQ''']]. | ||
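Two of the checks in the list above are simple arithmetic on header values: the 5-10% sample-point guideline and the voxel anisotropy ratio. The Python sketch below shows one way to compute them by hand. The function names and example values are hypothetical, "image size" is assumed to mean the total number of voxels, and the anisotropy ratio is assumed to be the largest voxel edge divided by the smallest.

```python
def sample_point_range(dims, low_percent=5, high_percent=10):
    """Return (low, high) sample counts as a percentage of the total voxel count."""
    total = dims[0] * dims[1] * dims[2]
    # Integer arithmetic avoids floating-point rounding surprises.
    return total * low_percent // 100, total * high_percent // 100


def anisotropy_ratio(spacing):
    """Largest voxel edge divided by the smallest voxel edge."""
    return max(spacing) / min(spacing)


dims = (256, 256, 100)
spacing = (1.0, 1.0, 5.0)

print(sample_point_range(dims))   # prints: (327680, 655360)
ratio = anisotropy_ratio(spacing)
if ratio >= 5:
    print(f"anisotropy ratio {ratio:g}: rotational alignment may be difficult")
```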

| Line 321: | Line 321: | ||

== I want to register two images with different intensity/contrast. == | == I want to register two images with different intensity/contrast. == | ||

| − | *The two most critical image features that determine automated registration accuracy and robustness are image contrast and resolution. Differences in image contrast are best addressed with the appropriate cost function. The cost function that has proven most reliable for registering images with different contrast (e.g. a T1 MRI to a T2 or an MRI to CT or PET) is '''mutual information'''. All intensity-based registration modules use mutual information as the default cost function. Only the [ | + | *The two most critical image features that determine automated registration accuracy and robustness are image contrast and resolution. Differences in image contrast are best addressed with the appropriate cost function. The cost function that has proven most reliable for registering images with different contrast (e.g. a T1 MRI to a T2 or an MRI to CT or PET) is '''mutual information'''. All intensity-based registration modules use mutual information as the default cost function. Only the [[Modules:RegisterImages-Documentation-3.6|''Expert Automated Registration'']] module lets you choose an alternative cost function. |

No extra adjustment is therefore needed in terms of adjusting parameters to register images of different contrast. Depending on the amount of differences you may consider masking to exclude distracting image content or adjusting the ''Histogram Bins'' setting to increase/decrease the level of intensity detail the algorithm is aware of. | No extra adjustment is therefore needed in terms of adjusting parameters to register images of different contrast. Depending on the amount of differences you may consider masking to exclude distracting image content or adjusting the ''Histogram Bins'' setting to increase/decrease the level of intensity detail the algorithm is aware of. | ||
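To make the role of mutual information and of the ''Histogram Bins'' setting more concrete, here is a toy Python sketch. It is not the implementation used by Slicer or ITK, only a plain histogram-based estimate on two short intensity lists; the function name `mutual_information` and the sample intensities are made up for the illustration.

```python
import math
from collections import Counter


def mutual_information(fixed, moving, bins):
    """Mutual information (in nats) of two equal-length intensity lists."""
    def quantize(values):
        lo, hi = min(values), max(values)
        width = (hi - lo) / bins or 1.0      # guard against constant images
        return [min(int((v - lo) / width), bins - 1) for v in values]

    f, m = quantize(fixed), quantize(moving)
    n = len(f)
    joint = Counter(zip(f, m))               # joint histogram of bin pairs
    pf, pm = Counter(f), Counter(m)          # marginal histograms
    return sum((c / n) * math.log(c * n / (pf[i] * pm[j]))
               for (i, j), c in joint.items())


# Toy "T1-like" intensities and the same structures with inverted contrast.
t1 = [0, 0, 100, 100, 200, 200]
t2 = [200, 200, 100, 100, 0, 0]

print(mutual_information(t1, t2, bins=3))    # high: intensities predict each other
print(mutual_information(t1, t2, bins=1))    # zero: one bin carries no intensity detail
```

The inverted contrast does not lower the score, because mutual information only measures how well one intensity predicts the other, not whether the two are equal. Collapsing everything into one bin removes all intensity detail, which is the extreme case of lowering the number of histogram bins.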

== How important is bias field correction / intensity inhomogeneity correction? == | == How important is bias field correction / intensity inhomogeneity correction? == | ||

| − | While registration may still succeed with mild cases of inhomogeneity, moderate to severe inhomogeneity can negatively affect automated registration quality. It is recommended to run an automated bias-field correction on both images before registration. [ | + | While registration may still succeed with mild cases of inhomogeneity, moderate to severe inhomogeneity can negatively affect automated registration quality. It is recommended to run an automated bias-field correction on both images before registration. [[Modules:N4ITKBiasFieldCorrection-Documentation-3.6|see here for documentation on this module]]. Masking of non-essential peripheral structures can also help to reduce distracting image content. [[Slicer-3-6-FAQ#What.27s_the_purpose_of_masking_.2F_VOI_in_registration.3F|See here for more on masking]] |

== Have the Slicer registration methods been validated? == | == Have the Slicer registration methods been validated? == | ||

| Line 337: | Line 337: | ||

== Registration is too slow. How can I speed up my registration? ==

The key parameters that influence registration speed are the number of sample points, the degrees of freedom (DOF) of the transform, the type of similarity metric, the initial misalignment, and differences in image contrast/content. If registration quality is acceptable, try reducing the sample points first. [[Slicer-3-6-FAQ#How_many_sample_points_should_I_choose_for_my_registration.3F|Guidelines on selecting sample points are given here]]. The degrees of freedom are usually dictated by the overall task and not subject to variation, but depending on initial misalignment, both speed and robustness can improve with an iterative approach that gradually increases DOF rather than starting with a high DOF setting. The [[Modules:BRAINSFit|BRAINSfit module]] and [[Modules:RegisterImages-Documentation-3.6|Expert Automated Registration module]] both allow prescriptions of iterative DOF.<br>

If using a cost/criterion function other than mutual information, note that the corresponding ITK implementation may not have been parallelized and hence may not take advantage of multi-threading/multiple CPU cores on your computer. This can slow down performance significantly. <br>

[[Slicer3:Registration|Also see the ''Slicer Registration Portal Page'' for help on selecting registration methods based on criteria of speed, precision etc.]]

== Registration results are inconsistent and don't work on some image pairs. Are there ways to make registration more robust? ==

The key parameters that influence registration robustness are the number of sample points, the initial degrees of freedom (DOF) of the transform, the type of similarity metric, the initial misalignment, and differences in image contrast/content. In particular, initialization methods that seek a first alignment before beginning the optimization can make things worse. If the initial position is already sufficiently close (i.e. more than 70% overlap and less than 20% rotational misalignment), consider turning off initialization if available (e.g. in [[Modules:BRAINSFit|BRAINSfit]] or [[Modules:RegisterImages-Documentation-3.6|Expert Automated]]).

Try increasing the sample points. [[Slicer-3-6-FAQ#How_many_sample_points_should_I_choose_for_my_registration.3F|Guidelines on selecting sample points are given here]]. The degrees of freedom are usually dictated by the overall task and not subject to variation, but depending on initial misalignment, robustness can greatly improve with an iterative approach that gradually increases DOF rather than starting with a high DOF setting. The [[Modules:BRAINSFit|BRAINSfit module]] and [[Modules:RegisterImages-Documentation-3.6|Expert Automated Registration module]] both allow prescriptions of iterative DOF. In addition, the [[Modules:RegisterImagesMultiRes-Documentation-3.6|Multiresolution]] module steps through multiple cycles of different image coarseness, which is aimed primarily at robustness.<br>

If using a cost/criterion function other than mutual information, note that MI tends to be the most forgiving/robust toward differences in image contrast. <br>

[[Slicer3:Registration|Also see the ''Slicer Registration Portal Page'' for help on selecting registration methods based on criteria of robustness, speed, precision etc.]]

== One of my images has a clipped field of view. Can I still use automated registration? ==

| Line 353: | Line 353: | ||

There are 2 ways to see the result of a registration: 1) by creating a new resampled volume that represents the moving image in the new orientation, or 2) by direct (dynamic) rendering of the original image in a new space when placed inside a transform. The latter is '''not''' available for non-rigid transforms, hence a registration that includes nonlinear components (BSpline, Warp) must be visualized by first resampling the entire volume with the new transform. If you ran a registration yet see no effect, the reason could be one of the following:

*you did not request an output. In the module parameters section, you must specify either an output transform or an output image/volume. Select one or both (for nonrigid registration).

*you requested a result transform but the moving volume is not placed inside the transform in the MRML tree. Not all modules automatically place the moving image inside the result transform node: the [[Modules:RegisterImages-Documentation-3.6|Expert Automated Module]], for example, does not, so in that case do this manually in the ''Data'' module by dragging the moving volume node inside the result transform.

*registration completed with an error. Check for status messages at the top of the module and look at the ''Error Log'' in the window menu to see if there were any errors reported.

*you performed a non-rigid (BSpline) registration but did not yet request an output volume. To do so after registration is complete, use the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6|Resample DWI/Vector module]].

== What's the difference between Rigid and Affine registration? ==

| Line 364: | Line 364: | ||

== The nonrigid (BSpline) registration transform does not seem to be nonrigid or does not show up correctly. ==

See the FAQ on viewing registration results [[Slicer-3-6-FAQ#I_ran_a_registration_but_cannot_see_the_result._How_do_I_visualize_the_result_transform.3F|here]].

BSpline transforms are '''not''' available for immediate rendering by placing volumes or models inside the transforms. Only linear transforms can be viewed that way. A BSpline transform must be visualized by resampling the entire volume with the new transform. If you did not request an output volume when running the registration, you can create one after the fact with the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6|Resample DWI/Vector module]].

== Can I combine multiple registrations? ==

| Line 375: | Line 375: | ||

== What's the difference between BRAINSfit and BRAINSDemonWarp? ==

[[Modules:BRAINSFit|BRAINSfit]] performs affine and BSpline registration that commonly will have fewer than a few hundred DOF, whereas [[Modules:BRAINSDemonWarp|BRAINSDemonWarp]] performs an optic-flow, high-DOF warping scheme that has many thousands of DOF and is significantly less constrained.
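As a rough illustration of the difference in scale, the sketch below counts parameters under two simplifying assumptions that are not taken from the modules themselves: a BSpline transform is treated as one 3-D displacement vector per control point, and a demons-style warp as one 3-D displacement vector per voxel. The function names and the example grid and image sizes are hypothetical.

```python
def bspline_dof(control_points_per_axis, dims=3):
    """DOF of a BSpline grid: one displacement vector per control point."""
    return dims * control_points_per_axis ** dims

def dense_field_dof(shape):
    """DOF of a dense deformation field: one displacement vector per voxel."""
    voxels = 1
    for n in shape:
        voxels *= n
    return len(shape) * voxels

print(bspline_dof(4))                    # 4 x 4 x 4 control points
print(dense_field_dof((64, 64, 32)))     # small example volume
```

With these assumed sizes the BSpline grid has 192 parameters while the dense field has several hundred thousand, which is why the dense warp is far less constrained and far more demanding in memory and computation.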

== Is the BRAINSfit registration for brain images only? ==

| Line 384: | Line 384: | ||

== Which registration methods offer non-rigid transforms? ==

If ''Affine'' is counted as non-rigid, then all modules except ''Fast Rigid'' support non-rigid transforms. In the more common understanding, non-rigid transforms with more than 12 DOF are offered by the [[Modules:DeformableB-SplineRegistration-Documentation-3.6|Fast BSpline]], [[Modules:BRAINSFit|BRAINSFit]], [[Modules:RegisterImages-Documentation-3.6|Expert Automated]], and [[Modules:BRAINSDemonWarp|BRAINSDemonWarp]] modules, as well as by extensions like [[Modules:PlastimatchDICOMRT|Plastimatch]] or [[Modules:HammerRegistration|HAMMER]].<br>

For a detailed review of specific registration features, consult the [[Registration:Categories|'''Slicer Registration Portal Page''']].

== Which registration methods offer masking? ==

Masking is supported directly by the [[Modules:RegisterImages-Documentation-3.6|Expert Automated]] module, [[Modules:BRAINSFit|BRAINSfit]] and the [[Modules:RegisterImagesMultiRes-Documentation-3.6|Robust Multiresolution]] module. Note that the use of masks differs among these:

:*[[Modules:RegisterImages-Documentation-3.6|Expert Automated]] module: requires binary mask for fixed image only.

:*[[Modules:RegisterImagesMultiRes-Documentation-3.6|Robust Multiresolution]] module: also allows mask in the form of simple ROI block; requires mask for fixed image only.

:*[[Modules:BRAINSFit|BRAINSfit]]: requires mask for both the fixed and moving image.

For a detailed review of specific registration features, consult the [[Registration:Categories|'''Slicer Registration Portal Page''']].

== Is there a function to convert a box ROI into a volume labelmap? ==

Yes. Most registration functions that offer masking require a binary labelmap as mask input. This goes for the [[Modules:BRAINSFit|BRAINSfit]] and [[Modules:RegisterImages-Documentation-3.6|Expert Automated]] modules. The exception is the [[Modules:RegisterImagesMultiRes-Documentation-3.6|Robust Multiresolution]] module, which also accepts a simple ROI box as mask.

The [[Modules:CropVolume-Documentation-3.6|Crop Volume]] module will generate a labelmap from a defined box. You can create a new ROI box or select an existing one. You must select an image volume to crop for the operation, even if you're only interested in the ROI labelmap. You need not select a dedicated output for the labelmap; it is generated automatically when the cropped volume is produced, and will be called ''Subvolume_ROI_Label'' in the MRML tree. After creating the box ROI labelmap, simply delete the cropped volume and other output like the "''...resample-scale-1.0"'' volume.<br>

You will likely need the volume with the same dimension and pixel spacing as the reference image. The box volume produced above has the correct dimension, but is only 1 voxel in size. Hence a second step is required, which is to resample the ''Subvolume_ROI_Label'' to the same resolution: use the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6|Resample ScalarVectorDWI]] module and select the appropriate reference and ''Nearest Neighbor'' as interpolation method. Finally, go to the ''Volumes'' module and check the ''Labelmap'' box in the info tab to turn the volume into a labelmap.
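For readers who prefer to see what the end result of these two steps amounts to, the following NumPy sketch builds a binary box labelmap directly on a reference grid. It is not what the Crop Volume or Resample modules do internally; the function <code>box_labelmap</code>, the axis-aligned box given in RAS millimeters and the 2 mm reference grid are assumptions for illustration.

```python
import numpy as np

def box_labelmap(shape, ijk_to_ras, ras_min, ras_max):
    """Binary labelmap on the reference grid: 1 inside the RAS box, 0 outside."""
    idx = np.indices(shape).reshape(3, -1)                  # all voxel indices
    ijk1 = np.vstack([idx, np.ones((1, idx.shape[1]))])     # homogeneous coords
    ras = (ijk_to_ras @ ijk1)[:3]                           # voxel centers in RAS
    lo = np.asarray(ras_min, dtype=float)[:, None]
    hi = np.asarray(ras_max, dtype=float)[:, None]
    inside = np.all((ras >= lo) & (ras <= hi), axis=0)
    return inside.reshape(shape).astype(np.uint8)

# Hypothetical reference grid: 10 x 10 x 10 voxels, 2 mm isotropic, axis aligned.
ijk_to_ras = np.diag([2.0, 2.0, 2.0, 1.0])
label = box_labelmap((10, 10, 10), ijk_to_ras, (4, 4, 4), (10, 10, 10))
print(label.sum())
```

Because the test is a hard inside/outside decision per voxel center, the result stays strictly binary, which is the same reason ''Nearest Neighbor'' interpolation is the right choice when resampling a labelmap.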

== BRAINSDemonWarp won't save the result transform ==

[[Modules:BRAINSDemonWarp|BRAINSDemonWarp]] is a non-rigid registration method that is memory and computation intensive. Depending on image size and system, you may reach memory limits. Open the ''Error Log'' dialog (Window menu) and select the "BRAINSDemon Warp output" line to see the specific error that is being reported there. If you get an error other than the ones discussed here, please send it to the [mailto:slicer-users@bwh.harvard.edu Slicer User Mailing List]. <br>

A memory error would look something like this:

 vtkCommandLineModuleLogic (0x82a0d40): BRAINSDemonWarp standard error:

| Line 413: | Line 413: | ||

If the error log reports that BRAINSDemonWarp itself completed ok, but sending the transform file back to Slicer failed, this is related to the mentioned limitation in handling deformation fields in the GUI. In that case use the command-line option to save the deformation field to a file for later use.

== Physical Space vs. Image Space: how do I align two registered images to the same image grid? ==

Slicer displays all data in a physical coordinate system. Hence an image can only be displayed correctly if it contains sufficient header information to relate the image voxel grid to physical space. This includes voxel size, axis orientation and scan order. It is therefore possible for two images to be aligned when viewed in Slicer, even though their underlying image grids are oriented very differently. To match the two images in image as well as physical space, the above-mentioned axis direction, voxel size and image grid orientation must match. The procedure will depend on the image data, but the main tools at your disposal are the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6|ResampleScalarVectorDWIVolume]] and [[Modules:OrientImages-Documentation-3.6|Orient Images]] modules.

== Is there a way to perform an Eddy current correction on DWI in Slicer? ==

Yes. You need to download the GTRACT extension (available from the menu View->Extension Manager).

| Line 441: | Line 441: | ||

 -0.0000 -0.4985 0.8669 -278.7091

 0 0 0 1.0000

:3. LPS to RAS conversion will take you all the way to what is in the file, i.e. pre- and post-multiply inv(c) by the respective matrices.
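The pre- and post-multiplication in step 3 can be sketched in NumPy as follows. This is an illustration under stated assumptions, not Slicer's own code: <code>c</code> is taken to be the 4x4 matrix as displayed in Slicer (RAS), the file is assumed to hold its inverse expressed in LPS, and the example matrix is a made-up pure translation rather than the one shown above.

```python
import numpy as np

# Converting between RAS and LPS flips the sign of the first two axes.
# The same diagonal matrix serves both directions because it is its own inverse.
LPS_RAS = np.diag([-1.0, -1.0, 1.0, 1.0])

def slicer_to_file(c):
    """Invert the displayed matrix, then pre- and post-multiply by the flip."""
    return LPS_RAS @ np.linalg.inv(c) @ LPS_RAS

def file_to_slicer(m):
    """Reverse direction: undo the flip, then invert."""
    return np.linalg.inv(LPS_RAS @ m @ LPS_RAS)

# Hypothetical displayed transform: translation by (10, 20, 30) mm in RAS.
c = np.eye(4)
c[:3, 3] = [10.0, 20.0, 30.0]

in_file = slicer_to_file(c)
print(in_file[:3, 3])
```

For this example the translation stored in the file would be (10, 20, -30): the inversion negates all three components, and the flip then negates the first two again. Rotations are affected in the same way, with the sign changes landing on the off-diagonal terms that mix the third axis with the first two.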

== How can I see the parameters of the function that describe a BSpline registration/deformation? ==

To see the parameters of the transform, you have to write it to file and inspect it by other means. The BSpline transform is saved as an ITK .tfm file, a text file containing the displacement vectors of each grid point, plus any initial affine transform (if present). One nice way to visualize it is to create a grid image of the same dimensions as your target and then apply the transform to this grid image; you can then see the deformations as deformations in the gridlines. [http://na-mic.org/Wiki/index.php/Projects:RegistrationDocumentation:UseCaseInventory:Auxiliary Example Grid images can be downloaded here].

Use the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6|ResampleScalarVectorDWIVolume]] module for the resampling.

A quick way directly in Slicer is to place the undeformed and deformed volumes into back- and foreground and fade back and forth with the fading slider.
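If you would rather generate a grid image yourself than download one, a minimal NumPy sketch is given below. The function name <code>grid_image</code>, the line spacing and the intensity value are arbitrary choices for illustration; writing the array to a file that Slicer can load (with the same header geometry as your target) is left out because it depends on the I/O library you use.

```python
import numpy as np

def grid_image(shape, spacing=8, value=255):
    """3-D volume that is zero everywhere except on regularly spaced grid planes."""
    img = np.zeros(shape, dtype=np.uint8)
    img[::spacing, :, :] = value   # planes perpendicular to the first axis
    img[:, ::spacing, :] = value   # planes perpendicular to the second axis
    img[:, :, ::spacing] = value   # planes perpendicular to the third axis
    return img

# Use the voxel dimensions of your target image here; 32^3 is only an example.
grid = grid_image((32, 32, 32), spacing=8)
print(grid.shape, int(grid.max()))
```

A smaller spacing shows finer detail of the deformation but makes individual lines harder to follow once they are warped.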

Another alternative is to convert the transform into a 4-D deformation field directly and visualize it in Slicer using RGB color. [[Slicer-3-6-FAQ#How_can_I_convert_a_BSpline_transform_into_a_deformation_field.3F|See FAQ below on how to convert.]]

== How can I convert a BSpline transform into a deformation field? ==

| Line 460: | Line 459: | ||

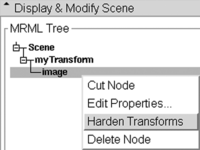

[[Image:HardenTransform.png|200px|left|right click on the image and select "Harden Transform" from the popup menu to reorient an image]] | [[Image:HardenTransform.png|200px|left|right click on the image and select "Harden Transform" from the popup menu to reorient an image]] | ||

You can apply an affine transform to an image by creating a transform, placing the volume inside that transform in the ''Data'' module, and then selecting ''Harden Transform'' via the context-menu (right click on the image volume). This will move the image back out to the main level and "apply" the transform. It will, however, '''not resample''' the image data, but rather place the information about the new orientation into the image header. When the image is saved, this information is saved also as part of the file header, as long as orientation data is supported by the file format. If the saved volume is now loaded by another software that does not consider this header orientation (e.g. ImageJ) or does not visualize the image in physical space, then the image will appear in its old position. <br> | You can apply an affine transform to an image by creating a transform, placing the volume inside that transform in the ''Data'' module, and then selecting ''Harden Transform'' via the context-menu (right click on the image volume). This will move the image back out to the main level and "apply" the transform. It will, however, '''not resample''' the image data, but rather place the information about the new orientation into the image header. When the image is saved, this information is saved also as part of the file header, as long as orientation data is supported by the file format. If the saved volume is now loaded by another software that does not consider this header orientation (e.g. ImageJ) or does not visualize the image in physical space, then the image will appear in its old position. <br> | ||

| − | You can avoid this problem by actually resampling the image data. To do this, go to the [ | + | You can avoid this problem by actually resampling the image data. To do this, go to the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6|Filtering/ResampleScalarVectorDWIVolume]] module, and select your image and transform as input, create a new volume as output and click Apply. This new volume will now be in the new orientation that will be retained if saved and reloaded elsewhere. |

<br> | <br> | ||

<br> | <br> | ||

| Line 470: | Line 469: | ||

==I have some DICOM images that I want to reslice at an arbitrary angle ==

There are several ways to go about this. If you wish to register your image to another reference/target image, run one of the automated registration methods. If you wish to realign manually, the most efficient way is to use the [[Modules:Transforms-Documentation-3.6|''Transforms'']] module. Once you have the desired orientation, you need to apply the new orientation to the image. You can do this in 2 ways: 1) without or 2) with resampling the image data.

#Without resampling: In the Data module, select the image (inside the transform node) and select "Harden Transform" from the pulldown menu. This will write the new orientation in physical space into the image header. This will work only if the other software you use and the image format you save to both support this form of orientation information in the image header.

#With resampling: Go to the [[Modules:ResampleScalarVectorDWIVolume-Documentation-3.6|Filtering/ResampleScalarVectorDWIVolume]] module and create a new image by resampling the original with the new transform. This will incur interpolation blurring but is guaranteed to carry over to all image formats and software.

For more details on manual transforms, see [[Slicer-3-6-FAQ#Can_I_manually_adjust_or_correct_a_registration.3F|this FAQ]] and the [[Slicer_3.6:Training|Manual Registration Tutorial here]].

= '''Developer FAQ''' =

Latest revision as of 14:52, 27 November 2019


This page contains frequently asked questions related to Slicer 3.6

Contents

- 1 User FAQ: Installation & Generic

- 2 User FAQ: Data

- 3 User FAQ: Segmentation

- 4 User FAQ: Diffusion

- 5 User FAQ : installation & general use

- 6 User FAQ : Registration

- 6.1 How do I fix incorrect axis directions? Can I flip an image (left/right, anterior/posterior etc) ?

- 6.2 How do I fix a wrong image orientation in the header? / My image appears upside down / facing the wrong way / I have incorrect/missing axis orientation

- 6.3 How do I fix incorrect voxel size / aspect ratio of a loaded image volume?

- 6.4 I don't understand your coordinate system. What do the coordinate labels R,A,S and (negative numbers) mean?

- 6.5 My image is very large, how do I downsample to a smaller size?

- 6.6 How do I register images that are very far apart / do not overlap

- 6.7 How do I register a DWI image dataset to a structural reference scan? (Cookbook)

- 6.8 How do I initialize/align images with very different orientations and no overlap?

- 6.9 Can I manually adjust or correct a registration?

- 6.10 What's the difference between the various registration methods listed in Slicer?

- 6.11 What's the purpose of masking / VOI in registration?

- 6.12 Registration failed with an error. What should I try next?

- 6.13 Registration result is wrong or worse than before?

- 6.14 How many sample points should I choose for my registration?

- 6.15 I want to register two images with different intensity/contrast.

- 6.16 How important is bias field correction / intensity inhomogeneity correction?

- 6.17 Have the Slicer registration methods been validated?

- 6.18 I want to register many image pairs of the same type. Can I use Slicer registration in batch mode?

- 6.19 How can I save the parameter settings I have selected for later use or sharing?

- 6.20 Registration is too slow. How can I speed up my registration?

- 6.21 Registration results are inconsistent and don't work on some image pairs. Are there ways to make registration more robust?

- 6.22 One of my images has a clipped field of view. Can I still use automated registration?

- 6.23 I ran a registration but cannot see the result. How do I visualize the result transform?

- 6.24 What's the difference between Rigid and Affine registration?

- 6.25 What's the difference between Affine and BSpline registration?

- 6.26 The nonrigid (BSpline) registration transform does not seem to be nonrigid or does not show up correctly.

- 6.27 Can I combine multiple registrations?

- 6.28 Can I combine image and surface registration?

- 6.29 What's the difference between BRAINSfit and BRAINSDemonWarp?

- 6.30 Is the BRAINSfit registration for brain images only?

- 6.31 What's the difference between Fast Rigid and Linear Registration?

- 6.32 Which registration methods offer non-rigid transforms?

- 6.33 Which registration methods offer masking?

- 6.34 Is there a function to convert a box ROI into a volume labelmap?

- 6.35 BRAINSDemonWarp won't save the result transform

- 6.36 Physical Space vs. Image Space: how do I align two registered images to the same image grid?

- 6.37 Is there a way to perform an Eddy current correction on DWI in Slicer

- 6.38 The registration transform file saved by Slicer does not seem to match what is shown

- 6.39 How can I see the parameters of the function that describe a BSpline registration/deformation?

- 6.40 How can I convert a BSpline transform into a deformation field?

- 6.41 My reoriented image returns to original position when saved; Problem with the Harden Transform function

- 6.42 What is the Meaning of 'Fixed Parameters' in the transform file (.tfm) of a BSpline registration ?

- 6.43 I have some DICOM images that I want to reslice at an arbitrary angle

- 7 Developer FAQ

User FAQ: Installation & Generic

Is slicer3 ready for end users yet?

Yes! See the home page.

DLL Problems on Windows

I just downloaded the 3D Slicer binaries for Windows and unpacked them. When I double-clicked the file "slice2-win32-x86.ext", it gave the error message: can't find package vtk while executing "package require vtk" invoked from within "set::SLICER(VTK_VERSION) [package require vtk]" (file "C:/slicer2/Base/tck/Go.tck" line 483). We've seen this sort of thing happen when you have incompatible DLLs installed. E.g. some programs will install a vtkCommon.dll into your Windows system folder, and Windows tries to use it instead of the one that comes with Slicer. As a test, you could search for vtk*.dll on your system and remove or rename any that are not in Slicer.

User FAQ: Data

I have a CT and would like to create an STL file

- If the CT is in DICOM format, use the File/Add Volume module to load the data into Slicer (see here for more about loading data).

- Use the Interactive Editor to create a label map which contains the structure you are interested in (look in the tutorial pages for how to use the editor)

- Use the Modelmaker functionality to create a triangulated surface model

- Use File/Save to save the model in STL format.

How do I load my DICOM DTI data into Slicer?

- Use the DICOM to nrrd converter to create a nrrd file

- load the nrrd file into slicer and begin processing (see the DTI tutorial for more information)

User FAQ: Segmentation

Interactive Editor: I would like to segment more than one structure

Use the Per-Structure Volumes feature to create label volumes for each structure that you would like to segment interactively. After successful segmentation of all the structures, the individual label volumes can be merged.

User FAQ: Diffusion

I have DICOM DWI images. What do I need to do to process them in Slicer?

DWI formats are not properly standardized (as of 2010). In many cases, vendors put important information about gradients into the private fields. Our DICOM to NRRD converter has an extensive library of such special cases. Convert your DWI images to a NRRD volume, before beginning the post processing.

I want to register my diffusion scan to a structural scan

I have streamlines from DTI and would like to find the subset which goes through a particular region

The ROI selection module in Slicer 3.6 lets you filter for streamlines that pass (or do not pass) through label map ROIs.

User FAQ : installation & general use

User FAQ : Registration

How do I fix incorrect axis directions? Can I flip an image (left/right, anterior/posterior etc) ?

Sometimes the header information that describes the orientation and size of the image in physical space is incorrect or missing. Slicer displays images in physical space, in a RAS orientation. If images appear flipped or upside down, the transform that describes how the image grid relates to the physical world is incorrect. In proper RAS orientation, a head should have anterior end at the top in the axial view, look to the left in a sagittal view, and have the superior end at the top in sagittal and coronal views.

Yes, you can flip images and change the axis orientation of images in Slicer. But we urge you to use great caution when doing so, since this can introduce substantial problems if done wrong. Wrong information is worse than no information. Below are the steps to flip the LR axis of an image:

- Go to the Data module, right click on the node labeled "Scene" and select "Insert Transform" from the pulldown menu

- Move/drag your image into/onto the newly created transform

- Go to the Transforms module and select the newly created transform from the "Transform Node" menu.

- Click in the top left field of the 3x3 matrix, where you see the number 1.0. Hit the RETURN key to activate editing.

- Place a minus sign in front of the 1, then hit the RETURN key again. If you have your image in the axial slice view, you should see it flip immediately.

- Go back to the Data module, right click on your image and select Harden Transform from the pulldown menu.

- The image will move outside the transform. Your change of axis orientation has now been applied.

- Save your image under a new name. Do not use a format that doesn't store physical orientation info in the header (jpg, gif etc.). Also consider saving the transform as documentation of the change you have applied.

- Note that this is saved as part of the image orientation info and not as an actual resampling of the image, i.e. if you save your image and reload it in another software that does not read the image orientation info in the header (or displays in image space only), you will not see the change you just applied.

To flip the other axes do the same as above but edit the diagonal entries in the 2nd and 3rd row, for flipping anterior-posterior and inferior-superior directions, respectively.
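The matrix edit described above amounts to negating one diagonal entry of the transform. The following is a minimal sketch of that idea in plain Python, not Slicer code: the function names `flip_matrix` and `apply` are hypothetical, and the transform is written as a 4x4 homogeneous matrix (an assumption; the entries edited in the steps above sit in its upper-left 3x3 block).

```python
def flip_matrix(axis):
    """Return a 4x4 identity matrix with the diagonal entry for `axis` negated.

    axis: 0 = left-right, 1 = anterior-posterior, 2 = inferior-superior.
    """
    if axis not in (0, 1, 2):
        raise ValueError("axis must be 0, 1 or 2")
    m = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
    m[axis][axis] = -1.0
    return m


def apply(matrix, point):
    """Apply a 4x4 homogeneous matrix to a 3D point given as (x, y, z)."""
    x, y, z = point
    vec = (x, y, z, 1.0)
    return tuple(sum(matrix[r][c] * vec[c] for c in range(4)) for r in range(3))


if __name__ == "__main__":
    # Flip left-right: only the first coordinate changes sign.
    print(apply(flip_matrix(0), (10.0, 20.0, 30.0)))
```

A point 10 mm to one side of the midline ends up 10 mm to the other side, while its anterior-posterior and inferior-superior coordinates stay the same.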

How do I fix a wrong image orientation in the header? / My image appears upside down / facing the wrong way / I have incorrect/missing axis orientation

- Problem: My image appears upside down / flipped / facing the wrong way / I have incorrect/missing axis orientation

- Explanation: Slicer presents and interacts with images in physical space, which differs from the way the image is stored by a separate transform that defines how large the voxels are and how the image is oriented in space, e.g. which side is left or right. This information is stored in the image header, and different image file formats have different ways of storing this information. If Slicer supports the image format, it should read the information in the header and display the image correctly. If the image appears upside down or with distorted aspect ratio etc, then the image header information is either missing or incorrect.

- Fix: See FAQ above for a way to flip axes inside Slicer. You can also correct the voxel dimensions and the image origin in the Info tab of the Volumes module, and you can reorient images via the Transforms module. Reorientation however will work only if the incorrect orientation involves rotation or translation.

- To fix an axis orientation directly in the header info of an image file:

- 1. load the image into slicer (Load Volume, Add Data,Load Scene..)

- 2. save the image back out as NRRD (.nhdr) format.

- 3. open the .nhdr with a text editor of your choice. You should see lines that look like this:

space: left-posterior-superior
sizes: 448 448 128
space directions: (0.5,0,0) (0,0.5,0) (0,0,0.8)

- 4. the three brackets ( ) represent the coordinate axes as defined in the space line above, i.e. the first one is left-right, the second anterior-posterior, and the last inferior-superior. To flip an axis place a minus sign in front of the respective number, which is the voxel dimension. E.g. to flip left-right, change the line to

space directions: (-0.5,0,0) (0,0.5,0) (0,0,0.8)

- 5. alternatively, if the entire orientation is wrong, i.e. coronal slices appear in the axial view etc., it may be easier to simply change the space field to the proper orientation. Note that Slicer uses RAS space by default, i.e. first (x) axis = left-right, second (y) axis = posterior-anterior, third (z) axis = inferior-superior

- 6. save & close the edited .nhdr file and reload the image in slicer to see if the orientation is now correct.
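The header edit in the steps above can also be scripted. This is a hedged sketch rather than a Slicer tool: the function names `negate` and `flip_space_direction` are hypothetical, and it assumes the header stores one `space directions:` line with parenthesised vectors, as in the example shown earlier. Work on a copy of the .nhdr file.

```python
import re

# Matches one parenthesised vector such as "(0.5,0,0)".
VECTOR = re.compile(r"\(([^()]*)\)")


def negate(component):
    """Toggle the sign of one numeric component, keeping its text otherwise."""
    text = component.strip()
    if float(text) == 0:
        return text  # zero has no sign worth flipping
    return text[1:] if text.startswith("-") else "-" + text.lstrip("+")


def flip_space_direction(header_text, axis):
    """Negate the axis-th vector on the 'space directions:' line of a header."""
    out = []
    for line in header_text.splitlines(keepends=True):
        if line.startswith("space directions:"):
            matches = list(VECTOR.finditer(line))
            if not 0 <= axis < len(matches):
                raise ValueError("no vector number %d on this line" % axis)
            m = matches[axis]
            flipped = ",".join(negate(c) for c in m.group(1).split(","))
            line = line[:m.start(1)] + flipped + line[m.end(1):]
        out.append(line)
    return "".join(out)


if __name__ == "__main__":
    header = (
        "space: left-posterior-superior\n"
        "sizes: 448 448 128\n"
        "space directions: (0.5,0,0) (0,0.5,0) (0,0,0.8)\n"
    )
    # Flip the first axis, as in step 4 above.
    print(flip_space_direction(header, 0), end="")
```

The sign is toggled on the text itself rather than by reformatting the number, so the voxel sizes keep their original precision.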

How do I fix incorrect voxel size / aspect ratio of a loaded image volume?

- Problem: My image appears distorted / stretched / with incorrect aspect ratio

- Explanation: Slicer presents and interacts with images in physical space, which differs from the way the image is stored by a set of separate information that represents the physical "voxel size" and the direction/spatial orientation of the axes. If the voxel dimensions are incorrect or missing, the image will be displayed in a distorted fashion. This information is stored in the image header. If the information is missing, a default of isotropic 1 x 1 x 1 mm size is assumed for the voxel.

- Fix: You can correct the voxel dimensions and the image origin in the Info tab of the Volumes module. If you know the correct voxel size, enter it in the fields provided and hit RETURN after each entry. You should see the display update immediately. Ideally you should try to maintain the original image header information from the point of acquisition. Sometimes this information is lost in format conversion. Try an alternative converter or image format if you know that the voxel size is correctly stored in the original image. Alternatively you can try to edit the information in the image header, e.g. save the volume in NRRD (.nhdr) format and open the ".nhdr" file with a text editor.

I don't understand your coordinate system. What do the coordinate labels R,A,S and (negative numbers) mean?

- It's very important to realize that Slicer displays all images in physical space, i.e. in mm. This requires orientation and size information that is stored in the image header. How that header info is set and read from the header will determine how the image appears in Slicer. RAS is the abbreviation for right, anterior, superior; indicating in order the relation of the physical axis directions to how the image data is stored.

- For a detailed description on coordinate systems see here.
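As a small illustration of the axis labels: the NRRD header example earlier on this page declares left-posterior-superior (LPS) space, while Slicer displays in RAS. The two differ only in the sign of the first two axes. The sketch below shows that conversion; the function name `lps_to_ras` is hypothetical and not part of Slicer.

```python
def lps_to_ras(point):
    """Convert a physical point in mm from LPS to RAS coordinates.

    Left becomes negative right and posterior becomes negative anterior;
    the superior coordinate is shared by both conventions.
    """
    left, posterior, superior = point
    return (-left, -posterior, superior)


if __name__ == "__main__":
    # A point 10 mm to the left and 5 mm anterior of the origin, 3 mm up.
    print(lps_to_ras((10.0, -5.0, 3.0)))
```

Applying the same function twice returns the original point, so it also serves for the RAS to LPS direction. A negative R coordinate therefore simply means "to the left of the origin".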

My image is very large, how do I downsample to a smaller size?

Several Resampling modules provide this functionality. If you also have a transform you wish to apply to the volume, we recommend the ResampleScalarVectorDWIVolume module, otherwise the ResampleVolume module. For an overview of Resampling tools see here.

Resampling in place:

- 1. Go to the Volumes module

- 2. from the Active Volume pulldown menu, select the image you wish to downsample

- 3. Open the Info tab. Write down the voxel dimensions (Image Spacing) and overall image size (Image Dimensions), e.g. 1.2 x 1.2 x 3 mm voxel size, 512 x 512 x 86. You will need this information to determine the amount of down-/up-sampling you wish to apply

- 4. Go to the Resample Volume module

- 5. In the Spacing field, enter the new desired voxel size. This is the original voxel size noted above multiplied by your downsampling factor. For example, if you wish to halve the in-plane resolution but keep the number of slices, you would enter a new voxel size of 2.4,2.4,3.

- 6. For Interpolation, check the box most appropriate for your input data: for labelmaps check nearest Neighbor, for 3D MRI or other bandlimited signals check hamming. For most others leave the linear default. The sinc (hamming, cosine, welch) and bspline (cubic) interpolators tend to produce less blurring than linear, but may cause overshoot near high contrast edges (e.g. negative intensity values for background pixels)

- 7. Input Volume: Select the image you wish to resample

- 8. Output Volume: Select Create New Volume for output volume, and rename to something meaningful, like your input + suffix "_resampled"

- 9. Click Apply
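The arithmetic in step 5 can be sketched as follows. This is an illustrative helper, not a Slicer function: the name `downsampled_spacing` and the per-axis factor tuple are assumptions made for the example.

```python
def downsampled_spacing(spacing, factors):
    """Multiply each voxel dimension (mm) by its per-axis downsampling factor."""
    if len(spacing) != len(factors):
        raise ValueError("spacing and factors must have the same length")
    return tuple(s * f for s, f in zip(spacing, factors))


if __name__ == "__main__":
    # Values from the steps above: 1.2 x 1.2 x 3 mm voxels,
    # halved in plane, slices left unchanged.
    new_spacing = downsampled_spacing((1.2, 1.2, 3.0), (2, 2, 1))
    # Format as the comma-separated text expected in the Spacing field.
    print(",".join(format(v, "g") for v in new_spacing))
```

A factor larger than 1 makes the voxels bigger (downsampling); a factor below 1 would upsample instead.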

Resampling in place to match another image in size:

- 1. Go to the ResampleScalarVectorDWIVolume module

- 2. Input Volume: Select the image you wish to resample

- 3. Reference Volume: Select the reference image whose size/dimensions you want to match to.

- 4. Output Volume: Select Create New Volume for output volume, and rename to something meaningful, like your input + suffix "_resampled"

- 5. Interpolation Type: check the box most appropriate for your input data: for labelmaps check nn=nearest Neighbor, for 3D MRI or other bandlimited signals check ws=windowed sinc. For most others leave the linear default. The ws (hamming, cosine, welch) and bspline (cubic) interpolators tend to produce less blurring than linear, but may cause overshoot near high contrast edges (e.g. negative intensity values for background pixels)

- 6. Click Apply. Note that if the input and reference volumes do not overlap in physical space, i.e. are not at least roughly co-registered, the resampled result may not contain any or all of the input image. This is because the program will resample in the space defined by the reference image and will fill in with zeros if there is nothing at that location. If you get an empty or clipped result, that is most likely the cause. In that case try to re-center the two volumes before resampling.
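Whether two volumes overlap in physical space can be estimated from the origin, spacing and dimensions shown in the Info tab of the Volumes module. The sketch below is a rough check under simplifying assumptions: axes are taken as unrotated (axis-aligned), half-voxel offsets are ignored, and the function names `extent` and `volumes_overlap` are hypothetical.

```python
def extent(origin, spacing, size):
    """Return per-axis (min, max) physical bounds in mm for an unrotated volume."""
    bounds = []
    for o, s, n in zip(origin, spacing, size):
        end = o + s * n  # spacing may be negative for a flipped axis
        bounds.append((min(o, end), max(o, end)))
    return bounds


def volumes_overlap(bounds_a, bounds_b):
    """True if the two bounding boxes intersect along every axis."""
    return all(
        lo_a < hi_b and lo_b < hi_a
        for (lo_a, hi_a), (lo_b, hi_b) in zip(bounds_a, bounds_b)
    )


if __name__ == "__main__":
    reference = extent((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (100, 100, 100))
    far_away = extent((500.0, 0.0, 0.0), (1.0, 1.0, 1.0), (100, 100, 100))
    # No shared region along the first axis, so resampling would come out empty.
    print(volumes_overlap(reference, far_away))
```

If the check reports no overlap, re-centering the volumes first is the remedy described in step 6.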

Resampling in place by specifying new dimensions:

- 1. Go to the ResampleScalarVectorDWIVolume module

- 2. Input Volume: Select the image you wish to resample

- 3. Reference Volume: leave at "none"

- 4. Output Volume: Select Create New Volume for output volume, and rename to something meaningful, like your input + suffix "_resampled"

- 5. Interpolation Type: check the box most appropriate for your input data: for labelmaps check nn=nearest Neighbor, for 3D MRI or other bandlimited signals check ws=windowed sinc. For most others leave the linear default. The ws (hamming, cosine, welch) and bspline (cubic) interpolators tend to produce less blurring than linear, but may cause overshoot near high contrast edges (e.g. negative intensity values for background pixels)

- 6. Output Parameters: here you specify the new voxel size / spacing and dimensions. Note that you need to set both. If only the voxel size is specified, the image is resampled but retains its original dimensions (i.e. empty/zero space). If only the dimensions are specified the image will be resampled starting at the origin and cropped but not resized.

- new voxel size: calculate the new voxel size and enter it in the Spacing field, as described in step #5 of "Resampling in place" above

- new image dimensions: enter new dimensions under Size. To prevent clipping, the output field of view (FOV = voxel size * image dimensions) should match the input

- 7. leave rest at default and click Apply
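The FOV rule above (FOV = voxel size * image dimensions) determines the Size values once the new spacing is chosen. The following sketch computes them; the function name `size_for_same_fov` is hypothetical, and rounding up to whole voxels is a design choice made here so that nothing is clipped.

```python
import math


def size_for_same_fov(in_spacing, in_size, out_spacing):
    """Return output dimensions that cover at least the input field of view."""
    sizes = []
    for s_in, n_in, s_out in zip(in_spacing, in_size, out_spacing):
        fov = s_in * n_in  # physical extent in mm along this axis
        # The small tolerance keeps floating-point noise from adding a voxel.
        sizes.append(int(math.ceil(fov / s_out - 1e-9)))
    return tuple(sizes)


if __name__ == "__main__":
    # Example values used earlier on this page: 1.2 x 1.2 x 3 mm voxels,
    # 512 x 512 x 86 image, resampled to 2.4 x 2.4 x 3 mm.
    print(size_for_same_fov((1.2, 1.2, 3.0), (512, 512, 86), (2.4, 2.4, 3.0)))
```

When the new spacing does not divide the FOV evenly, the result is rounded up, so the output covers slightly more than the input rather than less.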

How do I register images that are very far apart / do not overlap

- Problem: when you place one image in the background and another in the foreground, the one in the foreground will not be (entirely) visible when switching back & forth

- Explanation: Slicer chooses the field of view (FOV) for the display based on the image selected for the background. The FOV will therefore be centered around what is defined in that image's origin. If two images have origins that differ significantly, they cannot be viewed well simultaneously.

- Fix: recenter one or both images as follows:

- 1. Go to the Volumes module,

- 2. Select the image to recenter from the Active Volume menu

- 3. Select the Info tab.

- 4. Click the Center Volume button. You will notice how the image origin numbers displayed above the button change. If you have the image selected as foreground or background, you may see it move to a new location.

- 5. Repeat steps 2-4 for the other image volumes

- 6. From the slice view menu, select Fit to Window

- 7. Images should now be roughly in the same space. Note that this re-centering is considered a change to the image volume, and Slicer will mark the image for saving next time you select Save.
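To see why the origin numbers change in step 4, consider what centering means numerically: the origin is chosen so that the middle of the voxel grid lands at physical (0,0,0). The sketch below shows one common convention for an unrotated volume. Whether the Center Volume button uses exactly this formula is an assumption, and the function name `centered_origin` is hypothetical.

```python
def centered_origin(spacing, size):
    """Return the origin (mm) that puts the grid center at physical (0, 0, 0).

    Assumes unrotated axes; the grid center lies (size - 1) / 2 voxels
    from the first voxel along each axis.
    """
    return tuple(-0.5 * s * (n - 1) for s, n in zip(spacing, size))


if __name__ == "__main__":
    # Two volumes with different grids end up centered on the same point,
    # which is what brings them roughly into the same space.
    print(centered_origin((1.0, 1.0, 2.0), (101, 101, 51)))
    print(centered_origin((0.5, 0.5, 0.5), (201, 201, 201)))
```

Because both volumes are moved to the same physical center, they overlap afterwards regardless of where their original origins were.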

How do I register a DWI image dataset to a structural reference scan? (Cookbook)

- Problem: The DWI/DTI image is not in the same orientation as the reference image that I would like to use to locate particular anatomy; the DWI image is distorted and does not line up well with the structural images

- Explanation: DWI images are often acquired as EPI sequences that contain significant distortions, particularly in the frontal areas. Also because the image is acquired before or after the structural scans, the subject may have moved in between and the position is no longer the same.

- Fix: obtain a baseline image from the DWI sequence, register that with the structural image and then apply the obtained transform to the DTI tensor. The two chief issues with this procedure deal with the difference in image contrast between the DWI and the structural scan, and with the common anisotropy of DWI data.

- Overall strategy and detailed instructions for registration & resampling can be found in our DWI registration cookbook

- you can find example cases in the DWI chapter of the Slicer Registration Case Library, which includes example datasets and step-by-step instructions. Find an example closest to your scenario and perform the registration steps recommended there.

How do I initialize/align images with very different orientations and no overlap?