Slicer3:DTMRI:GeneralDiffusionFramework

Demian Wassermann, Raul San Jose, Lauren O'Donnell

The goal is to define the basic design and data structure specifications needed to support general diffusion models in Slicer. General diffusion models are newer approaches to diffusion imaging of the brain, such as the two-tensor diffusion model [Sharon et al] and higher-order spherical harmonics tensors [Decautox et al].

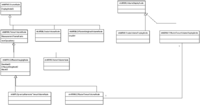

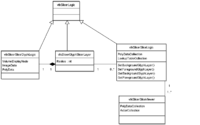

MRML Redesign

Support for general diffusion data models comes through a superclass that generalizes the original behaviour of vtkDiffusionTensorVolumeNode, as described in the original design ([1] and [2]).

This new class, vtkDiffusionImageVolumeNode, is a child of vtkMRMLTensorVolumeNode and abstracts the behaviour related to diffusion imaging. The main members of vtkDiffusionImageVolumeNode are:

- ID to the Diffusion Weighted Volume Node

- ID to the Baseline Image Volume Node

- ID to the Mask Image Volume Node

As part of this redesign, the handling of the display node and storage node IDs has been revamped for consistency. vtkMRMLVolumeNode is the class that keeps the DisplayNodeID, and the subclasses downcast the node corresponding to that ID to make sure that the DisplayNode is the right one for the specific VolumeNode.
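
The downcasting pattern described above can be sketched in plain C++ without any VTK or MRML dependency. Everything in this block is a hypothetical stand-in (the `Scene` map, `VolumeNode`, `DiffusionTensorVolumeNode`, and the display node structs are illustrative names, not the real MRML classes); it only shows the idea that the base class stores the ID while each subclass checks the type of the node behind it.

```cpp
#include <map>
#include <memory>
#include <string>

// Hypothetical display node types (not the real MRML classes).
struct DisplayNode { virtual ~DisplayNode() = default; };
struct ScalarDisplayNode : DisplayNode {};
struct DiffusionTensorDisplayNode : DisplayNode {};

// Hypothetical scene: maps a node ID to the node it identifies.
using Scene = std::map<std::string, std::shared_ptr<DisplayNode>>;

// The base class is the only place where the display node ID is stored.
class VolumeNode {
public:
  virtual ~VolumeNode() = default;
  void SetDisplayNodeID(const std::string& id) { displayNodeID_ = id; }
  const std::string& GetDisplayNodeID() const { return displayNodeID_; }

protected:
  // Resolves the stored ID; returns nullptr when the ID is not in the scene.
  DisplayNode* LookupDisplayNode(const Scene& scene) const {
    auto it = scene.find(displayNodeID_);
    return it == scene.end() ? nullptr : it->second.get();
  }

private:
  std::string displayNodeID_;
};

// The subclass downcasts, so a display node of the wrong type yields nullptr.
class DiffusionTensorVolumeNode : public VolumeNode {
public:
  DiffusionTensorDisplayNode* GetDiffusionTensorDisplayNode(const Scene& scene) const {
    return dynamic_cast<DiffusionTensorDisplayNode*>(LookupDisplayNode(scene));
  }
};
```

With this arrangement, a volume node whose ID points at a display node of the wrong type gets a null pointer back rather than a mismatched display node.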

The VolumeDisplayNode hierarchy has been refactored to better support display nodes that rely on glyphs as a display option. A new abstract class, vtkMRMLGlyphedDisplayNode, handles the different glyph properties. This class serves as the parent class of vtkMRMLDiffusionTensorVolumeDisplayNode and vtkMRMLVectorVolumeDisplayNode.
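
As a rough illustration of this hierarchy, the following standalone C++ sketch places shared glyph properties in an abstract parent and leaves the data-specific part to the two children. The class names carry a `Sketch` suffix because they are stand-ins, and the particular properties shown (visibility, scale factor, input attribute name) are assumptions chosen for the example, not the actual members of vtkMRMLGlyphedDisplayNode.

```cpp
#include <string>

// Hypothetical stand-in for the abstract glyphed display node:
// glyph properties common to every glyph-capable display node live here.
class GlyphedDisplayNodeSketch {
public:
  virtual ~GlyphedDisplayNodeSketch() = default;

  void SetGlyphVisibility(bool visible) { glyphVisibility_ = visible; }
  bool GetGlyphVisibility() const { return glyphVisibility_; }

  void SetGlyphScaleFactor(double factor) { glyphScaleFactor_ = factor; }
  double GetGlyphScaleFactor() const { return glyphScaleFactor_; }

  // Each concrete node names the point data attribute that feeds its glyphs.
  virtual std::string GlyphInputAttribute() const = 0;

private:
  bool glyphVisibility_ = false;   // assumed default
  double glyphScaleFactor_ = 1.0;  // assumed default
};

class DiffusionTensorVolumeDisplayNodeSketch : public GlyphedDisplayNodeSketch {
public:
  std::string GlyphInputAttribute() const override { return "Tensors"; }
};

class VectorVolumeDisplayNodeSketch : public GlyphedDisplayNodeSketch {
public:
  std::string GlyphInputAttribute() const override { return "Vectors"; }
};
```

Code that only adjusts glyph properties can then work through a pointer to the parent class, without knowing whether the volume holds tensors or vectors.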

Specifications to encapsulate General Diffusion Data into vtkImageData

Slicer 3's intrinsic image data representation is the vtkImageData class. This implies important limitations, namely:

- The dimensionality is strictly 3D: the data is assumed to be arranged on a 3D grid, and there is no possibility of extending it to further dimensions

- Only a specific set of point data attributes is allowed: Scalars, Normals, Vectors and Tensors

There is not much we can do about dimensionality. However, we can define a convention for storing point data attributes beyond those listed above, using new vtkDataArrays in the vtkFieldData. Our task, then, is to define a clear convention that makes an unambiguous connection between each vtkDataArray and the representation associated with it.

Each vtkDataArray will be a new array with a predefined name in the vtkFieldData associated with the PointData (or CellData, for that matter) of the vtkImageData. The array will be a multicomponent vtkDataArray whose components have a predefined semantic meaning, so that it can encompass different data complexities.

| Array Name | Number of Components | Multicomponent Semantics |
|---|---|---|
| Scalar | 1 | A standard scalar image |
| RGB | 3 | Color image. The first component is the R channel, the second is the G channel and the third is the B channel |
| Symmetric-Tensor | 6 | A symmetric tensor. The data is arranged as follows: [dxx dxy dxz dyy dyz dzz] |
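
To make the convention concrete, here is a minimal standalone C++ sketch of how a consumer might check an array against the table and expand a `Symmetric-Tensor` entry into a full 3x3 matrix. `NamedArray`, `ExpectedComponents`, `FollowsConvention` and `UnpackSymmetricTensor` are hypothetical names introduced for this example. The sketch also assumes values are stored tuple by tuple (all components of one point, then the next point), and it uses a plain `std::vector` in place of a real vtkDataArray.

```cpp
#include <array>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Array name -> expected number of components, copied from the table.
inline const std::map<std::string, int>& ExpectedComponents() {
  static const std::map<std::string, int> table = {
      {"Scalar", 1}, {"RGB", 3}, {"Symmetric-Tensor", 6}};
  return table;
}

// Hypothetical stand-in for a named, multicomponent data array.
// Assumed layout: components of point 0, then components of point 1, ...
struct NamedArray {
  std::string name;
  int numberOfComponents;
  std::vector<double> values;
};

// True when the name is known, the component count matches the table,
// and the value count is a whole number of tuples.
bool FollowsConvention(const NamedArray& a) {
  auto it = ExpectedComponents().find(a.name);
  if (it == ExpectedComponents().end()) return false;
  if (it->second != a.numberOfComponents) return false;
  return a.values.size() % static_cast<std::size_t>(a.numberOfComponents) == 0;
}

using Matrix3 = std::array<std::array<double, 3>, 3>;

// Expands [dxx dxy dxz dyy dyz dzz] at one point into a symmetric 3x3 matrix.
// The caller is expected to pass a valid Symmetric-Tensor array and index.
Matrix3 UnpackSymmetricTensor(const NamedArray& a, std::size_t pointIndex) {
  const double* d = &a.values[pointIndex * 6];
  Matrix3 m{};
  m[0] = {d[0], d[1], d[2]};
  m[1] = {d[1], d[3], d[4]};
  m[2] = {d[2], d[4], d[5]};
  return m;
}
```

Storing only six values per point halves the redundancy of a full nine-component tensor, at the cost of every consumer having to agree on the component order given in the table.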

Design to display glyphs

Glyphs are a common way to represent complex diffusion data that encode information about the multiple orientations of water diffusion in the brain. Glyphs are polygonal elements that can be associated with every location in the image grid.
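
As one possible illustration, and not the design specified on this page, a simple tensor glyph can be built by deforming the vertices of a unit sphere with the tensor stored at a grid location and translating the result to that location. For a symmetric positive-definite tensor this turns the sphere into an ellipsoid whose axes follow the tensor's eigenvectors. The sketch below computes one such vertex in standalone C++; `DeformByTensor`, `GlyphVertex` and the `scale` parameter are hypothetical, and the tensor uses the [dxx dxy dxz dyy dyz dzz] ordering from the table above.

```cpp
#include <array>

using Vec3 = std::array<double, 3>;
using SymTensor = std::array<double, 6>;  // [dxx dxy dxz dyy dyz dzz]

// Multiplies the symmetric tensor (as a 3x3 matrix) by the vector v.
Vec3 DeformByTensor(const SymTensor& d, const Vec3& v) {
  return {d[0] * v[0] + d[1] * v[1] + d[2] * v[2],
          d[1] * v[0] + d[3] * v[1] + d[4] * v[2],
          d[2] * v[0] + d[4] * v[1] + d[5] * v[2]};
}

// Position of one glyph vertex: the unit-sphere direction is deformed by the
// tensor, scaled for display, and moved to the grid point that owns the glyph.
Vec3 GlyphVertex(const Vec3& gridPoint, const SymTensor& d,
                 const Vec3& unitDirection, double scale) {
  const Vec3 p = DeformByTensor(d, unitDirection);
  return {gridPoint[0] + scale * p[0],
          gridPoint[1] + scale * p[1],
          gridPoint[2] + scale * p[2]};
}
```

Calling `GlyphVertex` for every vertex of a tessellated unit sphere would produce the polygonal element for one grid location; a real implementation would also need to choose which grid locations receive a glyph.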