Difference between revisions of "Documentation/4.0/Modules/OpenIGTLinkIF"

(Created page with '<!-- ---------------------------- --> {{documentation/{{documentation/version}}/module-header}} <!-- ---------------------------- --> <!-- ---------------------------- --> {{doc…') |

(Prepend documentation/versioncheck template. See http://na-mic.org/Mantis/view.php?id=2887) |

||

| (11 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| + | <noinclude>{{documentation/versioncheck}}</noinclude> | ||

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

{{documentation/{{documentation/version}}/module-header}} | {{documentation/{{documentation/version}}/module-header}} | ||

| Line 17: | Line 18: | ||

}} | }} | ||

{{documentation/{{documentation/version}}/module-introduction-end}} | {{documentation/{{documentation/version}}/module-introduction-end}} | ||


<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

| Line 35: | Line 31: | ||

*Slice driving: The module can control volume re-slicing plane based on linear transform in the MRML scene. | *Slice driving: The module can control volume re-slicing plane based on linear transform in the MRML scene. | ||

| − | + | [[image:Slicer3_OpenIGTLinkIF_Architecture.png|thumb|center|500px|The figure shows an example schematic diagram where multiple devices are communicating with 3D Slicer through the OpenIGTLink Interface. Each connector is assigned to one of the external devices for TCP/IP connection. The connectors serve as interfaces between the external devices and the MRML scene to convert an OpenIGTLink message to a MRML node or vice versa. ]] | |

|} | |} | ||

| + | <!-- ---------------------------- --> | ||

| + | {{documentation/{{documentation/version}}/module-section|Supported Devices}} | ||

| + | See [http://www.na-mic.org/Wiki/index.php/OpenIGTLink/List here] for a list of supported devices. | ||

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

{{documentation/{{documentation/version}}/module-section|Use Cases}} | {{documentation/{{documentation/version}}/module-section|Use Cases}} | ||

| − | + | * '''MRI-compatible Robotic Systems''' (BRP Project between BWH, Johns Hopkins University and Acoustic MedSystems Inc., "Enabling Technologies for MRI-Guided Prostate Interventions") | |

| − | * | + | **The 3D Slicer was connected to an MRI-compatible Robot, using OpenIGTLinkIF to send target position from Slicer to the robot and to send the actual robot position (based on sensor information) from the robot back to Slicer. Slicer was also connected to the MRI scanner. Scan plane position and orientation were prescribed in Slicer and transmitted to the scanner for controlling real-time image acquisition and for transferring the acquired images from the MR scanner back into Slicer for display. |

| + | * '''Neurosurgical Robot Project'''(Nagoya Institute of Technology, Japan) | ||

| + | **The 3D Slicer was connected to the optical tracking system (Optotrak, Northern Digital Inc.) to acquire current position of the end-effector of the robot. | ||

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

{{documentation/{{documentation/version}}/module-section|Tutorials}} | {{documentation/{{documentation/version}}/module-section|Tutorials}} | ||

| − | + | Please follow the [[Media:OpenIGTLinkTutorial_Slicer4.1.0_JunichiTokuda_Apr2012.pdf|OpenIGTLink IF Tutorial presentation file]]. | |


<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

{{documentation/{{documentation/version}}/module-section|Panels and their use}} | {{documentation/{{documentation/version}}/module-section|Panels and their use}} | ||

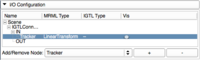

| − | + | A list of all the panels in the interface, their features, what they mean, and how to use them. | |


{| | {| | ||

| − | | | + | |[[Image:Slicer4-OpenIGTLinkIF-GUI.png|thumb|200px|OpenIGTLinkIF ]] |

| − | + | |[[Image:Slicer4-OpenIGTLinkIF-Connectors.png|thumb|200px|Connector Properties]] | |

| − | |[[Image: | + | |[[Image:Slicer4-OpenIGTLinkIF-IOConfiguration.png|thumb|200px|I/O Configuration Tree interface]] |

|} | |} | ||


<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

{{documentation/{{documentation/version}}/module-section|Similar Modules}} | {{documentation/{{documentation/version}}/module-section|Similar Modules}} | ||

| − | + | N/A | |

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

{{documentation/{{documentation/version}}/module-section|References}} | {{documentation/{{documentation/version}}/module-section|References}} | ||

| − | + | #'''Tokuda J''', Fischer GS, Papademetris X, Yaniv Z, Ibanez L, Cheng P, Liu H, Blevins J, Arata J, Golby A, Kapur T, Pieper S, Burdette EC, Fichtinger G, Tempany CM, Hata N, OpenIGTLink: An Open Network Protocol for Image-Guided Therapy Environment, Int J Med Robot Comput Assist Surg, 2009 (In print) | |


<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

{{documentation/{{documentation/version}}/module-section|Information for Developers}} | {{documentation/{{documentation/version}}/module-section|Information for Developers}} | ||

| − | {{documentation/{{documentation/version}}/module-developerinfo | + | {{documentation/{{documentation/version}}/module-developerinfo}} |

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

| − | {{documentation/{{documentation/version}}/module-footer | + | {{documentation/{{documentation/version}}/module-footer}} |

<!-- ---------------------------- --> | <!-- ---------------------------- --> | ||

Latest revision as of 07:28, 14 June 2013


For the latest Slicer documentation, visit the Read the Docs site.

Introduction and Acknowledgements

This work is supported by NA-MIC, NCIGT, and the Slicer Community. This work is partially supported by NIH 1R01CA111288-01A1 "Enabling Technologies for MRI-Guided Prostate Interventions" (PI: Clare Tempany), P01-CA67165 "Image Guided Therapy" (PI: Ferenc Jolesz) and AIST Intelligent Surgical Instrument Project (PI: Makoto Hashizume, Site-PI: Nobuhiko Hata).

Module Description


The OpenIGTLink Interface Module is a program module for network communication with external software and hardware using the OpenIGTLink protocol. Its features include:

- Slice driving: The module can control the volume re-slicing plane based on a linear transform in the MRML scene.

Figure: An example schematic diagram in which multiple devices communicate with 3D Slicer through the OpenIGTLink Interface. Each connector is assigned to one of the external devices for TCP/IP connection. The connectors serve as interfaces between the external devices and the MRML scene, converting an OpenIGTLink message to a MRML node or vice versa.
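The message side of the conversion a connector performs can be illustrated at the byte level. The following is a minimal sketch, not the module's implementation. It assumes the OpenIGTLink version 1 layout: a 58-byte big-endian header (version, type, device name, timestamp, body size, CRC) followed by the body, where a TRANSFORM body holds 12 32-bit floats (the three rotation columns, then the translation). The function names `pack_transform` and `unpack_transform` are hypothetical, and the CRC field is left at zero for brevity, so a receiver that validates the CRC would reject these messages.

```python
import struct

# Assumed version 1 header layout (big-endian, 58 bytes):
# version (uint16), type (char[12]), device name (char[20]),
# timestamp (uint64), body size (uint64), CRC (uint64).
HEADER = struct.Struct(">H12s20sQQQ")
# Assumed TRANSFORM body: 12 float32 values (48 bytes).
TRANSFORM_BODY = struct.Struct(">12f")


def pack_transform(device_name, matrix, timestamp=0):
    """Pack a 4x4 row-major matrix as a TRANSFORM message (hypothetical helper)."""
    name = device_name.encode("ascii")
    if len(name) > 20:
        raise ValueError("device name must fit in 20 bytes")
    # Column order: rotation columns 0..2, then the translation column.
    values = [matrix[r][c] for c in range(4) for r in range(3)]
    body = TRANSFORM_BODY.pack(*values)
    # Simplification: the CRC field is written as 0 instead of being computed.
    header = HEADER.pack(1, b"TRANSFORM", name, timestamp, len(body), 0)
    return header + body


def unpack_transform(message):
    """Return (type, device name, 4x4 matrix) from a packed message."""
    _version, msg_type, name, _ts, _size, _crc = HEADER.unpack_from(message)
    values = TRANSFORM_BODY.unpack_from(message, HEADER.size)
    # Rebuild the three transmitted rows, then append the implicit last row.
    matrix = [[values[c * 3 + r] for c in range(4)] for r in range(3)]
    matrix.append([0.0, 0.0, 0.0, 1.0])
    return (msg_type.rstrip(b"\0").decode("ascii"),
            name.rstrip(b"\0").decode("ascii"),
            matrix)
```

Calling `pack_transform("Target", matrix)` with a 4x4 list of lists yields the bytes a client could write to a TCP socket, and `unpack_transform` recovers the type, device name, and matrix on the receiving side. In this sketch the device name is the field a receiver could use to decide which scene node a message updates.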

Supported Devices

See the OpenIGTLink device list (http://www.na-mic.org/Wiki/index.php/OpenIGTLink/List) for supported devices.

Use Cases

- MRI-compatible Robotic Systems (BRP Project between BWH, Johns Hopkins University and Acoustic MedSystems Inc., "Enabling Technologies for MRI-Guided Prostate Interventions")
  - 3D Slicer was connected to an MRI-compatible robot, using OpenIGTLinkIF to send the target position from Slicer to the robot and to send the actual robot position (based on sensor information) from the robot back to Slicer. Slicer was also connected to the MRI scanner. Scan plane position and orientation were prescribed in Slicer and transmitted to the scanner to control real-time image acquisition, and the acquired images were transferred from the MR scanner back into Slicer for display.
- Neurosurgical Robot Project (Nagoya Institute of Technology, Japan)
  - 3D Slicer was connected to an optical tracking system (Optotrak, Northern Digital Inc.) to acquire the current position of the robot's end-effector.

Tutorials

Please follow the OpenIGTLink IF Tutorial presentation file (OpenIGTLinkTutorial_Slicer4.1.0_JunichiTokuda_Apr2012.pdf).

Panels and their use

A list of all the panels in the interface, their features, what they mean, and how to use them.

Screenshots: OpenIGTLinkIF; Connector Properties; I/O Configuration Tree interface.

Similar Modules

N/A

References

- Tokuda J, Fischer GS, Papademetris X, Yaniv Z, Ibanez L, Cheng P, Liu H, Blevins J, Arata J, Golby A, Kapur T, Pieper S, Burdette EC, Fichtinger G, Tempany CM, Hata N, OpenIGTLink: An Open Network Protocol for Image-Guided Therapy Environment, Int J Med Robot Comput Assist Surg, 2009 (In print)

Information for Developers

Section under construction.